Yes, you read that right: generative AI can make you less efficient. We hear everywhere about the benefits of generative AI: it will help us produce better content more efficiently. But that isn’t always true, especially in regulated industries.

What is generative AI?

Let’s back up a bit and define what we mean. Generative AI, as its name suggests, uses artificial intelligence to create something new in response to user prompts. One type of generative AI is the large language model (LLM), which can generate text-based content such as articles, papers, and emails. LLMs can be so good that their output is sometimes indistinguishable from human-written content.

LLMs come in two broad types: public and private. Public LLMs, such as ChatGPT, can be used by businesses to create content, but they come with limitations, including data privacy concerns, bias, and potential hallucinations. To help address these limitations, many businesses are creating their own LLMs. These private LLMs are typically built on top of public LLMs and then fine-tuned with company- and industry-specific data.

With that understanding of generative AI, let’s explore three use cases that show how generative AI may lead to inefficiencies in regulated industries: content creation, chatbots, and phone representatives.

Content creation

Marketing teams are beginning to use AI tools to create content, and most of these tools use LLMs. Whatever the type of LLM, public or private, the result is more content, faster. Marketers can prompt an AI tool to produce a first draft in seconds, then edit the copy into a draft for compliance review. Even with the added editing, the process is much faster. However, if the business is in a regulated industry, the marketers are likely producing more non-compliant content faster, which will only overwhelm the compliance department. That’s because most LLMs don’t know what is and isn’t compliant. This is an example of how generative AI can make an organization less efficient overall: one team’s efficiency can easily become another team’s bottleneck.

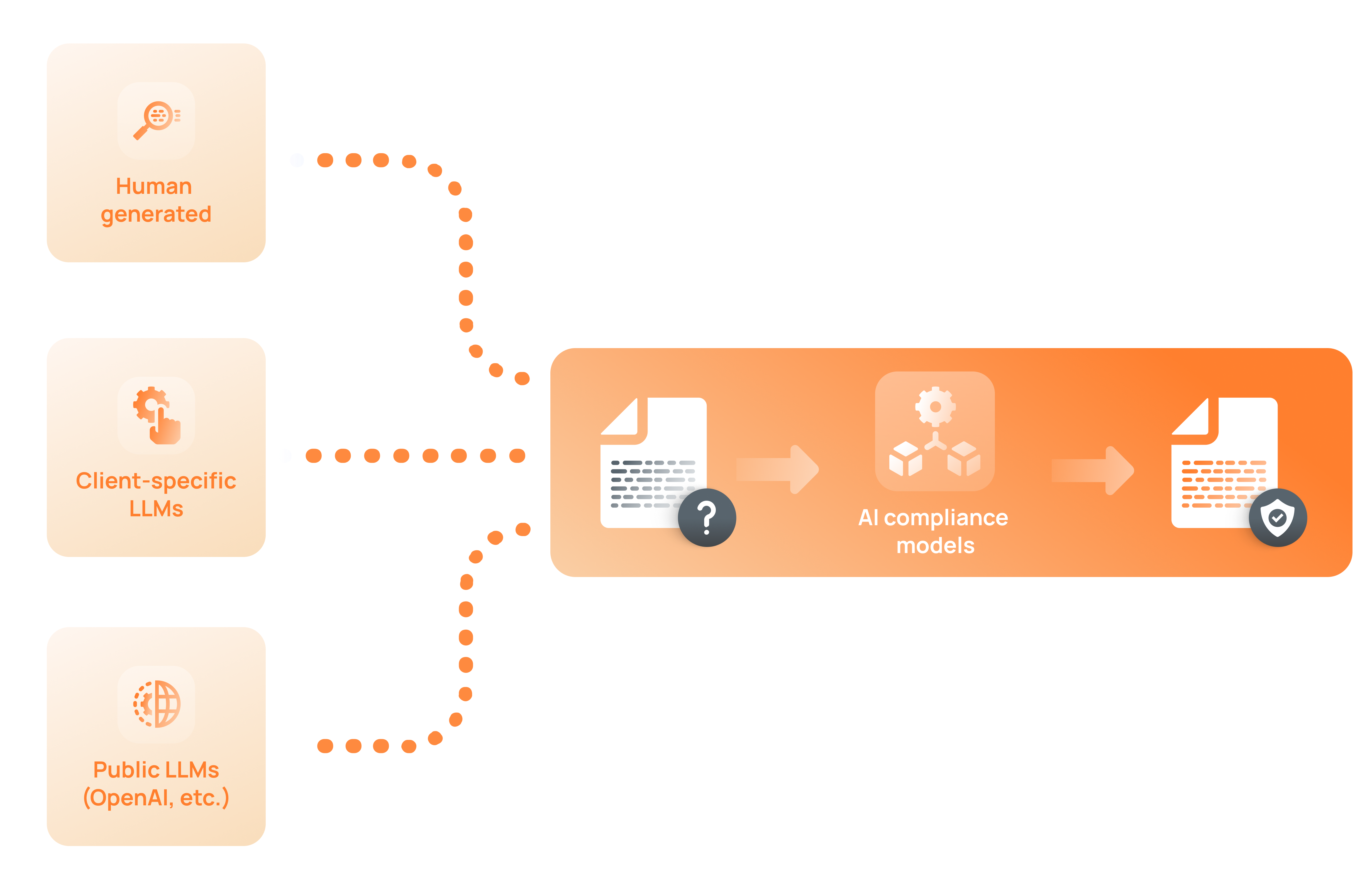

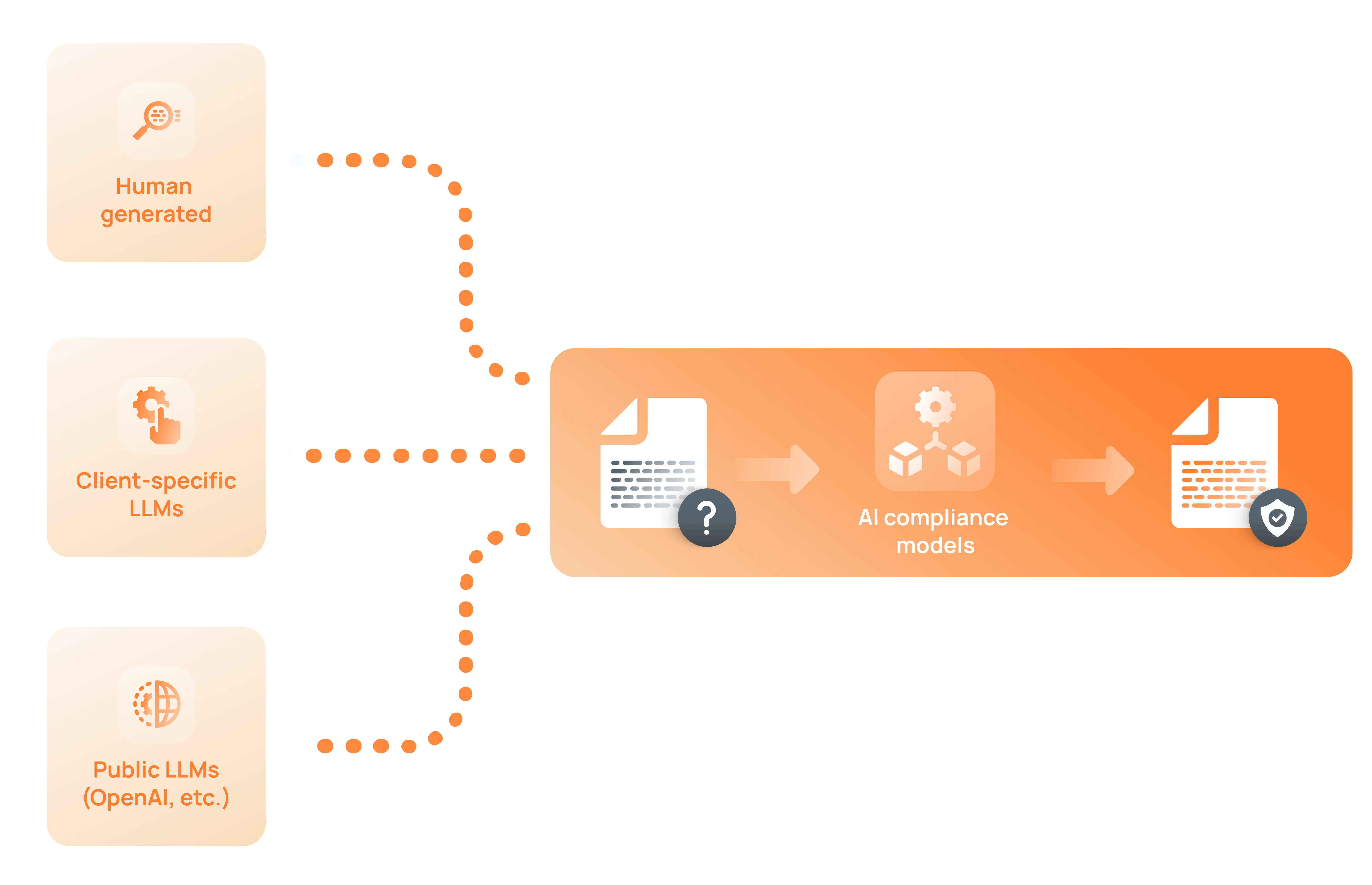

There is a way to get compliant content faster. LLMs used to create content need another layer of AI that checks for compliance with industry regulations. This extra layer can serve as a compliance screener that flags potentially non-compliant language and proposes more compliant alternatives. With the additional layer, content is more likely to be compliant and therefore easier to get through the checks and balances of compliance review. That means it can reach the public faster.
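To make the idea of a screening layer concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Saifr or any particular product works: the `RULES` table, the `Flag` type, the `screen` function, and the example phrases are all hypothetical, and simple regular expressions stand in for the trained compliance model a real screener would use.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Flag:
    phrase: str      # the text that was flagged
    suggestion: str  # a proposed, more compliant alternative


# Hypothetical rules: pattern -> more compliant wording.
# A production screener would use a trained model, not a fixed regex table.
RULES = {
    r"\bguaranteed returns?\b": "potential returns",
    r"\brisk[- ]free\b": "lower-risk",
}


def screen(text: str) -> Tuple[List[Flag], str]:
    """Flag potentially non-compliant phrases and return a revised draft."""
    flags: List[Flag] = []
    revised = text
    for pattern, suggestion in RULES.items():
        # Record every match in the original draft for the human reviewer.
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            flags.append(Flag(match.group(0), suggestion))
        # Apply the proposed wording to the revised draft.
        revised = re.sub(pattern, suggestion, revised, flags=re.IGNORECASE)
    return flags, revised
```

A draft such as "Our fund offers guaranteed returns with a risk-free strategy." comes back with two flags and the revised text "Our fund offers potential returns with a lower-risk strategy." The flags are kept separate from the rewrite so a compliance reviewer can still see what changed and why.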

Chatbots

Another increasingly popular business use for generative AI is chatbots, the apps on websites that answer your questions. In the past, businesses created tens of thousands of question-and-answer pairs. They would then use technology to match your question to one that already existed and provide the related answer. Sometimes it worked, sometimes not so much.
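The older matching approach can be sketched in a few lines of Python. This is a hypothetical illustration: the `FAQ` entries and the `answer` function are invented, and the standard library’s `difflib` string similarity stands in for whatever matching technology a given business actually used.

```python
import difflib
from typing import Optional

# Hypothetical pre-approved question-and-answer pairs (a real deployment
# might have tens of thousands). Keys are stored lowercase for matching.
FAQ = {
    "what are your business hours?": "We are open 9 a.m. to 5 p.m., Monday through Friday.",
    "how do i reset my password?": "Use the 'Forgot password' link on the login page.",
}


def answer(question: str, cutoff: float = 0.6) -> Optional[str]:
    """Return the stored answer for the closest stored question, or None."""
    # get_close_matches ranks candidates by similarity ratio and drops
    # any below the cutoff.
    matches = difflib.get_close_matches(question.lower(), list(FAQ), n=1, cutoff=cutoff)
    return FAQ[matches[0]] if matches else None
```

A question like "What are your hours?" is close enough to the stored business-hours question to return its answer. When no stored question clears the cutoff, the function returns `None`, which is the "not so much" case: the bot has nothing to offer.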

Now, companies can use those thousands of prepared questions and answers to train their AI models. Generative AI models are autoregressive: they build a response one piece at a time, predicting each next word from the words before it based on patterns learned from their training data. So even if the training set is 100% compliant, the answers the model generates might not be. Chatbots, when working correctly, provide helpful, accurate, and fast client service while saving the organization money, a win-win. However, if the bots aren’t producing compliant answers, they introduce risk to the organization. And if every answer needs to be checked by a human, that is inefficient.

So again, generative AI models need to be overlaid with AI models that check for compliance with industry regulations. With this extra guardrail, chatbots can produce compliant answers without continuous human supervision and deliver the promised efficiencies of AI.
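One way to picture the guardrail is as a wrapper around the generator: every draft answer passes a compliance check before it reaches the customer. The sketch below is hypothetical; `generate` and `is_compliant` are placeholders for a generative model and a compliance-screening model, and falling back to a pre-approved answer after a fixed number of attempts is one assumed design choice among several.

```python
from typing import Callable, Tuple


def guarded_reply(
    question: str,
    generate: Callable[[str], str],        # placeholder for a generative model
    is_compliant: Callable[[str], bool],   # placeholder for a compliance model
    fallback: str,                         # a pre-approved, compliant answer
    max_attempts: int = 2,
) -> Tuple[str, bool]:
    """Return (answer, used_fallback).

    Each generated draft must pass the compliance check before it is returned.
    If no draft passes within max_attempts, the pre-approved fallback is
    returned instead, and the flag lets the caller route the case for review.
    """
    for _ in range(max_attempts):
        draft = generate(question)
        if is_compliant(draft):
            return draft, False
    return fallback, True
```

Returning a flag alongside the answer lets the application track how often the fallback was needed, so humans review only those cases rather than every answer.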


Phone reps

Phone reps can also use AI tools similar to those in the chatbot example. The AI can provide information and talking points that the rep can use or modify during a call, and it can quickly surface more accurate information, drawn from more sources, than a rep could easily find alone. When you have 10K or 20K reps, AI assistance can deliver meaningful time savings, helping reps complete calls faster and serve customers better.

However, if the AI is providing non-compliant information to the reps, it is introducing risk. Research has shown that people tend to rely on AI and trust it even when it provides incorrect information.¹ As AI improves, people have been shown to grow complacent and let the AI serve as a substitute rather than an augmentation tool. Here again, AI models that screen and correct for regulations can help reduce the risk and inefficiencies that generative AI can create.

Those in regulated industries should consider the full impact of any AI that they are implementing. Are they just creating more non-compliant content faster? It doesn’t have to be that way. Saifr was created to help solve this problem.

1. Dell’Acqua, Fabrizio. “Falling Asleep at the Wheel: Human/AI Collaboration in a Field Experiment on HR Recruiters.” Laboratory for Innovation Science, Harvard Business School.

Fidelity and any other third parties are independent entities and not affiliated. Mentioning them does not suggest a recommendation or endorsement by Fidelity.

1118344.1.0
